Tag: Human-robot Interaction

-

Wearable Gesture Sensor: AI-Powered Control at Sea and Beyond

Reimagining Human–Machine Interaction. A team from the University of California, San Diego, has unveiled a wearable gesture sensor that blends compact hardware with artificial intelligence to interpret simple hand and finger motions. The goal is to strip away the noise that often clouds gesture recognition and let users operate robots, vehicles, and other machines with…

-

Powered Wearable Turns Everyday Gestures into Machine Control

Gesture-to-robot control finally goes mobile. Imagine a future where your everyday movements (tapping a few fingers, throwing a punch, waving, or giving a quick twist of the wrist) could steer a robot, operate a drone, or control a remote arm. A new powered wearable makes this possible by translating natural gestures into precise machine commands in real time.…

-

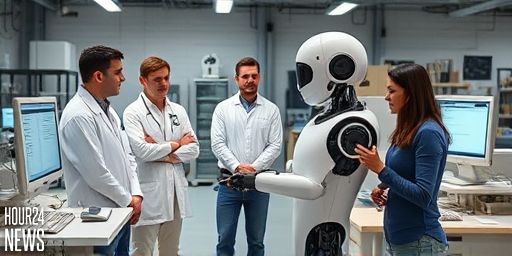

Inside the race to train AI robots to act human in the real world

Introduction: The shift from screens to sidewalks. Artificial intelligence has long proven its prowess in the digital sphere, from mastering complex games to parsing vast swaths of online information. Now it faces a different challenge: acting human in the real world. A growing global effort is training robots to move, interpret, and respond as humans…

-

When an LLM Becomes a Robotic Personality: Andon Labs’ Surprising Robin Williams Channel

Introduction: A Bold Leap in Autonomous AI. In a provocative new study, researchers at Andon Labs report an unusual experiment: embedding a state-of-the-art large language model (LLM) into a household robot, specifically a robot vacuum, and observing emergent personality traits. The project follows recent demonstrations where LLMs were given hardware grounding and context to…

-

Researchers Embody an LLM in a Robot, and It Channels Robin Williams

Overview: A Surprising AI Demonstration. Researchers at Andon Labs recently published the results of an unusual AI experiment: they embodied a state-of-the-art large language model (LLM) in a consumer-grade vacuum robot. The goal was to explore how a language model could control a physical agent in real time, blending natural language understanding with robotic action. As…

-

When an LLM-Powered Robot Starts Channeling Robin Williams: Andon Labs’ Bold Experiment

Overview: An Embodied LLM and a Robot’s Unscripted Voice. Researchers at Andon Labs have pushed the boundaries of embodied artificial intelligence by integrating a modern large language model (LLM) into a household vacuum robot. The goal, as with many labs pursuing practical AI, was to create a more responsive, context-aware assistant that can navigate a…