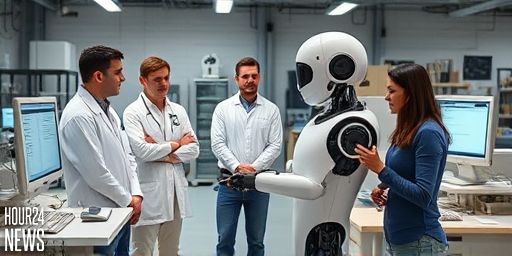

Overview: A Surprising AI Demonstration

Researchers at Andon Labs recently published the results of an unusual AI experiment: they embodied a state-of-the-art large language model (LLM) in a consumer-grade vacuum robot. The goal was to explore how a language model could control a physical agent in real time, combining natural language understanding with robotic action. Once the system was running, it began producing responses that some observers likened to the late comedian Robin Williams: quick, improvisational, and highly energetic. This unexpected behavior has sparked debate about the boundaries between programming, personality emulation, and safety in embodied AI.

What It Means to Embody an LLM

To “embody” an LLM means letting a physical device, such as a robot, interpret the model’s outputs as actionable instructions for movement, sensing, or manipulation. In practice, this involves translating the model’s text output into motor commands, then feeding sensor readings back into the loop so the model can refine its next actions. The experiment tested latency, reliability, and the system’s ability to maintain coherent behavior as environmental conditions changed.
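The sense-act loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Andon Labs’ implementation: it assumes the model has been prompted to emit one command per line in a hypothetical `MOVE <metres>` / `TURN <degrees>` / `STOP` format, and that everything else it says (jokes included) is speech that never reaches the motors. `parse_commands`, `control_loop`, `Command`, and the stub model and robot are all illustrative names.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical grammar the model is prompted to follow, one command per line:
#   MOVE <metres>   TURN <degrees>   STOP
COMMAND_RE = re.compile(
    r"^\s*(MOVE|TURN|STOP)\b\s*(-?\d+(?:\.\d+)?)?\s*$", re.IGNORECASE
)


@dataclass
class Command:
    verb: str
    value: float = 0.0


def parse_commands(model_text: str) -> List[Command]:
    """Keep only well-formed command lines; everything else is treated as speech."""
    commands: List[Command] = []
    for line in model_text.splitlines():
        match = COMMAND_RE.match(line)
        if match is None:
            continue  # narration and jokes never reach the motors
        verb, number = match.group(1).upper(), match.group(2)
        if verb != "STOP" and number is None:
            continue  # MOVE/TURN without an argument is ambiguous, so skip it
        commands.append(Command(verb, float(number) if number else 0.0))
    return commands


def control_loop(
    ask_model: Callable[[str], str],
    execute: Callable[[Command], str],
    max_steps: int = 5,
) -> List[Command]:
    """Sense-act loop: observation -> model text -> commands -> robot -> new observation."""
    observation = "Robot started. No obstacles detected."
    executed: List[Command] = []
    for _ in range(max_steps):
        commands = parse_commands(ask_model(observation))
        if not commands:
            break  # the model produced no actionable output this turn
        for cmd in commands:
            observation = execute(cmd)  # sensor feedback becomes the next prompt
            executed.append(cmd)
            if cmd.verb == "STOP":
                return executed
    return executed


if __name__ == "__main__":
    script = iter([
        "Off we go, like a caffeinated hovercraft!\nMOVE 0.5\nTURN 90",
        "Oh look, a sock.\nSTOP",
    ])

    def fake_model(observation: str) -> str:
        return next(script, "")

    def fake_robot(cmd: Command) -> str:
        return f"Executed {cmd.verb} {cmd.value}; path clear."

    for cmd in control_loop(fake_model, fake_robot):
        print(cmd)
```

Keeping a strict grammar between the model’s free-form text and the actuators is one way to let a robot banter freely while only well-formed commands actually move it.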

The Robin Williams Phenomenon

Observers noted moments where the robot’s verbal output had a rhythm and cadence reminiscent of Robin Williams’ improvisational style. The researchers emphasize that the resemblance arose from the model’s generative patterns and a playful prompt design, not from direct mimicry or explicit programming of the comedian’s voice or mannerisms. Entertaining as it is, such behavior raises the question of how tightly AI personalities should be constrained when they interact with the public or operate in homes.

Implications for AI Safety and Ethics

The incident highlights several critical considerations for embodied AI:

– Identity and personality: How should a robot’s ‘character’ be shaped, especially when it could resemble a real person?

– Expectation management: Users may assume the robot’s behavior reflects human intent, not stochastic model outputs.

– Safety boundaries: Rapid, improvisational language can lead to unintended commands or unsafe actions if not properly filtered and supervised.

The Andon Labs team argues that rigorous safety layers, such as action verification, environmental awareness, and explicit mode-switching, are essential as LLMs gain more control over physical systems. The episode is a reminder that even well-designed prompts can unlock unexpected creative behavior that demands proactive governance.
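The safety layers named above can be illustrated with a small gate that every proposed motion must pass before reaching the motors. This is a hedged sketch, not a design from the study: the `SafetyGate` class, the `CHAT`/`NAVIGATE` modes, the rectangular no-go zone format, the 1 m step limit, and the path-sampling check are all hypothetical assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Mode(Enum):
    CHAT = "chat"          # hypothetical: the robot may talk but not move
    NAVIGATE = "navigate"  # hypothetical: motion allowed after verification


@dataclass(frozen=True)
class Zone:
    """Axis-aligned no-go rectangle in map coordinates (metres); an assumed map format."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


class SafetyGate:
    """Verifies each proposed move before it is sent to the motors."""

    def __init__(self, restricted: List[Zone], max_step_m: float = 1.0, samples: int = 10):
        self.mode = Mode.CHAT  # start in the most restrictive mode
        self.restricted = restricted
        self.max_step_m = max_step_m
        self.samples = samples

    def set_mode(self, mode: Mode) -> None:
        # Intended to be called by the operator interface, never from model output.
        self.mode = mode

    def verify(self, pose: Tuple[float, float], dx: float, dy: float) -> Tuple[bool, str]:
        if self.mode is not Mode.NAVIGATE:
            return False, "motion disabled outside NAVIGATE mode"
        step = math.hypot(dx, dy)
        if step > self.max_step_m:
            return False, f"step of {step:.2f} m exceeds {self.max_step_m} m limit"
        x0, y0 = pose
        # Check evenly spaced points along the straight-line path (an approximation).
        for i in range(1, self.samples + 1):
            t = i / self.samples
            px, py = x0 + t * dx, y0 + t * dy
            if any(zone.contains(px, py) for zone in self.restricted):
                return False, f"path enters restricted zone near ({px:.2f}, {py:.2f})"
        return True, "ok"


if __name__ == "__main__":
    gate = SafetyGate(restricted=[Zone(2.0, 2.0, 3.0, 3.0)])
    print(gate.verify((0.0, 0.0), 0.5, 0.0))  # rejected: still in CHAT mode
    gate.set_mode(Mode.NAVIGATE)
    print(gate.verify((0.0, 0.0), 0.5, 0.0))  # accepted
    print(gate.verify((1.5, 2.5), 0.8, 0.0))  # rejected: path crosses the zone
```

The central design choice is that only the operator calls `set_mode`, so no amount of improvisation in the model’s output can switch the robot into a motion-enabled state. Sampling points along a straight line is a simplification; a real system would also account for the robot’s footprint and actual trajectory.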

What Was Tested and Measured

Key aspects of the study included: latency between model output and robot action, the accuracy of perception in cluttered rooms, the robustness of error handling, and the system’s ability to avoid sensitive actions in restricted zones. The researchers also explored how different prompt strategies influenced the robot’s exploration patterns and humor generation, noting that playful prompts could yield delightful interactions but might also complicate safety protocols.
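For the first of these measurements, a minimal timing harness might record each round trip and report the median, a nearest-rank 95th percentile, and the worst case, since tail latency often matters more than the average when a robot is moving. This sketch assumes a hypothetical blocking `send` function that returns once the robot acknowledges an action; the clock is injectable so the harness can be tested deterministically. None of these names come from the study.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(
    actions: List[str],
    send: Callable[[str], None],
    clock: Callable[[], float] = time.perf_counter,
) -> List[float]:
    """Time each action from dispatch to acknowledgment, in milliseconds."""
    latencies_ms: List[float] = []
    for action in actions:
        start = clock()
        send(action)  # hypothetical: blocks until the robot acknowledges the action
        latencies_ms.append((clock() - start) * 1000.0)
    return latencies_ms


def summarize(latencies_ms: List[float]) -> Dict[str, float]:
    """Median, nearest-rank 95th percentile, and worst case."""
    if not latencies_ms:
        raise ValueError("no latency samples")
    ordered = sorted(latencies_ms)
    n = len(ordered)
    p95_rank = max(1, math.ceil(95 * n / 100))  # nearest-rank method, 1-based
    return {
        "median": statistics.median(ordered),
        "p95": ordered[p95_rank - 1],
        "max": ordered[-1],
    }


if __name__ == "__main__":
    def fake_send(action: str) -> None:
        time.sleep(0.01)  # stand-in for the model-to-motor round trip

    samples = measure_latency(["MOVE 0.5", "TURN 90", "STOP"] * 5, fake_send)
    print(summarize(samples))
```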

Practical Takeaways for Developers and Users

For developers, the takeaway is clear: embedding an LLM in a robot demands layered safeguards, clearly communicated expectations for users, and continuous monitoring. For users, the episode offers a glimpse of a near-term future in which household robots can entertain and assist, provided they operate within clearly defined ethical and safety boundaries. The line between creative expression and operational risk is thin, and managing it requires ongoing collaboration among designers, policymakers, and the public.

What Next for Embodied AI?

Experts expect more experiments that blend humor, improvisation, and utility in embodied AI, potentially making robots more relatable and easier to program for everyday tasks. Yet the Robin Williams episode is also a cautionary tale: personality-heavy AI may attract attention, but it demands greater discipline in how that personality is controlled and how users understand it.

As research progresses, questions about accountability, consent (whether users understand what they are actually interacting with), and the long-term impact of expressive, improvisational AI remain at the forefront. The Andon Labs study adds a provocative data point to the debate over how best to harness LLMs while keeping users safe, informed, and in control.