Introduction: The shift from screens to sidewalks

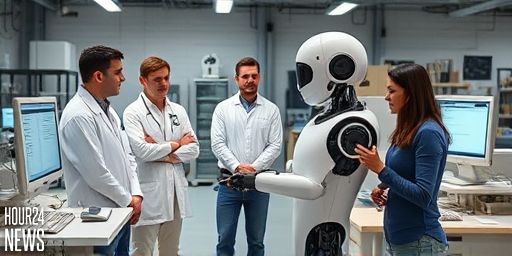

Artificial intelligence has long proven its prowess in the digital sphere, from mastering complex games to parsing vast swaths of online information. It now faces a different challenge: acting human in the real world. A growing global effort is training robots to move, interpret, and respond as humans do, rather than operate only as narrow algorithms behind a screen. This is not about making machines clever in theory; it’s about making them useful, safe, and capable in everyday environments such as homes, offices, and public spaces.

How robotics companies teach robots human motions and manners

At the core of this effort is the translation of the physics of human movement into robotic motion. Engineers combine perception, control, and learning to orchestrate fluid movement. Robots must account for gravity, keep their balance, regulate grip strength, and track their surroundings, all in real time. To achieve this, teams deploy:

- Sensor fusion to interpret heat, touch, vision, and proprioception (the sense of limb position).

- Motion planning that anticipates how a body should move to lift, walk, or fetch objects without colliding with the world.

- Imitation learning and reinforcement learning to mimic human actions while refining them through trial and error.
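To make the sensor fusion item above more concrete, here is a minimal sketch of one common approach: combining two independent estimates of the same quantity by weighting each with the inverse of its variance. The `Estimate` class, the `fuse` function, and the sample readings are hypothetical and purely illustrative; real robots typically rely on more elaborate filters.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # estimated quantity, e.g. a joint angle in radians
    variance: float  # uncertainty of the estimate (must be positive)


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Inverse-variance weighted fusion of two independent estimates."""
    if a.variance <= 0 or b.variance <= 0:
        raise ValueError("variances must be positive")
    # A more certain sensor (smaller variance) gets a larger weight.
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    fused_value = (w_a * a.value + w_b * b.value) / (w_a + w_b)
    # The fused estimate is more certain than either input alone.
    fused_variance = 1.0 / (w_a + w_b)
    return Estimate(fused_value, fused_variance)


# Hypothetical readings: a noisy camera estimate and a more precise encoder.
vision = Estimate(value=1.00, variance=0.04)
encoder = Estimate(value=1.10, variance=0.01)
fused = fuse(vision, encoder)
print(fused)
```

The fused value lands closer to the encoder reading because the encoder is assumed to be the more precise sensor, and the fused variance is smaller than either input variance.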

Robotics labs also simulate thousands of everyday scenarios to ensure a robot’s actions align with human expectations. The goal is not perfect replication of human behavior but reliable, intuitive interaction with people and objects in real settings.

Real-world tests: from lab benches to living rooms

Transitioning from controlled labs to actual homes reveals the gaps in current abilities. A robot might handle fine motor tasks in pristine conditions but stumble in cluttered rooms with uneven lighting, pets, or children. Real-world testing helps engineers identify critical issues: grasp reliability, route safety, social cues, and the ability to ask for help when unsure.
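The idea of asking for help when unsure can be sketched as a simple confidence threshold. This is an illustrative assumption, not a description of any particular company's system: the `choose_action` function, the action names, and the 0.8 threshold are all hypothetical.

```python
from typing import Dict


def choose_action(scores: Dict[str, float], threshold: float = 0.8) -> str:
    """Return the most confident action, or "ask_for_help" if confidence is low."""
    if not scores:
        # With no candidate actions at all, the robot defers to a human.
        return "ask_for_help"
    best_action = max(scores, key=lambda name: scores[name])
    if scores[best_action] < threshold:
        # Even the best option is too uncertain to act on safely.
        return "ask_for_help"
    return best_action


# Hypothetical confidence scores from a perception model.
clear_scene = {"grasp_cup": 0.93, "grasp_bowl": 0.05}
cluttered_scene = {"grasp_cup": 0.55, "grasp_bowl": 0.40}
print(choose_action(clear_scene))
print(choose_action(cluttered_scene))
```

In practice the threshold would be tuned per task: a higher value makes the robot more cautious at the cost of interrupting people more often.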

Test deployments are becoming increasingly common in consumer electronics settings, hotel lobbies, hospitals, and research campuses. These trials measure how well robots interpret natural language, respond to human directions, and adapt to unexpected obstacles. Feedback from these tests informs iterative design loops, reducing the time between hypothesis and practical improvement.

Ethical and safety guardrails

As robots become more integrated into daily life, questions of safety, privacy, consent, and bias take center stage. Researchers must consider how a robot interprets a person’s intent, what it should do in ambiguous situations, and how to ensure it does not cause harm. Transparent failure handling, clear consent for data collection, and visible limitations are essential to maintaining trust as machines learn to act human in real environments.

The human side of the race: new skills and jobs

Bringing humanoid robots into living spaces creates demand for a new class of professionals: robotics trainers, field testers, and safety engineers. These roles blend software expertise with hands-on hardware know-how and people skills. Companies increasingly partner with universities and research institutes to build talent pipelines that can design, test, and refine systems that “act human” while remaining controllable and safe.

What success looks like and what remains uncertain

Success means robots that can cooperate with humans in everyday tasks with minimal friction. They should understand human goals, anticipate needs, and communicate clearly when they require assistance. Yet fundamental challenges remain: achieving robust perception in variable lighting, making autonomous decisions that align with human values, and ensuring that learning from one environment generalizes well to another.

As the race to train AI robots to act human in the real world accelerates, the boundary between automation and companionship blurs. The most enduring progress will likely come from systems designed to support people rather than replace them — cooperative partners that enhance daily life while staying within trustworthy, well-governed limits.