Introduction: A new frontier in understanding ancient hands

Researchers at Griffith University are exploring whether modern artificial intelligence can help identify the sex of ancient cave artists by studying finger flutings, one of the oldest forms of rock art. Finger flutings are the marks left when fingers are drawn across moonmilk, a soft mineral film that coats some cave walls. By combining tactile experiments with virtual reality (VR) simulations, the team aims to learn whether image-recognition methods can distinguish the sex of the people who made flutings from the marks they left behind. The effort sits at the intersection of archaeology, cognitive science, and machine learning, and seeks to move beyond traditional measurement methods that have proven less reliable.

Why finger flutings matter in digital archaeology

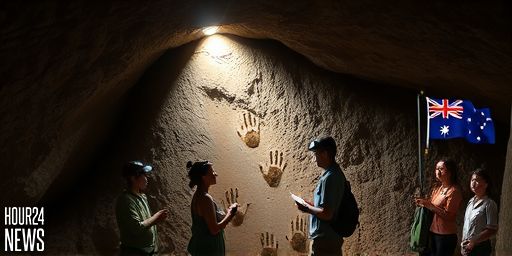

Finger flutings appear in pitch-dark caves across Europe and Australia. Some of the oldest examples, in France, date to about 300,000 years ago and are attributed to Neanderthals. Determining who left these marks can have real-world implications: it can inform decisions about site access and cultural preservation, and it contributes to debates about social organization in ancient communities. Researchers have traditionally relied on finger size, hand measurements, or pigment outlines to infer who made the marks. These methods can be inconsistent, however, because fluting pressure varies, cave surfaces are irregular, and finger and hand sizes overlap considerably between males and females. The Griffith study shifts the focus from manual measurements to a data-driven approach built on digital archaeology techniques.

The study design: tactile realism meets VR experimentation

The project involved two controlled experiments with 96 adult participants. Each participant made nine flutings in each of two settings: once on a clay substitute for moonmilk designed to resemble cave surfaces, and once in VR using a Meta Quest 3 headset. Every fluting was recorded as a high-resolution image, building a dataset suitable for image-recognition analysis. The researchers then trained two common image-recognition models on these images and assessed their performance using standard classification metrics. A critical part of the study was guarding against overfitting, that is, ensuring that the models learned generalizable patterns rather than memorizing the specific dataset.
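If each of the 96 participants made nine flutings per condition, that gives 864 images per condition (tactile or VR). Because every person contributes nine images, a purely random image-level split could place the same hand in both the training and test sets, letting a model score well by recognizing individuals rather than learning sex-related features. The article does not say how the Griffith team partitioned its data; the Python sketch below assumes a participant-level hold-out, which is a common safeguard, and the function name `grouped_split`, the 20% test fraction, and the `(participant, fluting)` image IDs are all hypothetical.

```python
import random

def grouped_split(n_participants=96, flutings_per_participant=9,
                  test_fraction=0.2, seed=0):
    """Hypothetical participant-level split: every fluting by a given person
    lands entirely in the training set or entirely in the test set."""
    participants = list(range(n_participants))
    random.Random(seed).shuffle(participants)  # shuffle people, not individual images
    n_test = round(n_participants * test_fraction)
    test_people = set(participants[:n_test])

    train, test = [], []
    for person in range(n_participants):
        for fluting in range(flutings_per_participant):
            image_id = (person, fluting)  # hypothetical (participant, fluting) identifier
            (test if person in test_people else train).append(image_id)
    return train, test

train, test = grouped_split()
print(len(train), len(test))  # 693 171
```

Splitting by person is only one guard against overfitting: it does not stop a model from latching onto artefacts of the capture setup that every participant shares.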

Key findings: tactile data shows more promise than VR in sex classification

The results were mixed but informative. The VR images did not support reliable sex classification: although accuracy was acceptable in some cases, overall discrimination and balance were weak. The tactile images, by contrast, performed markedly better. Under one training condition, the models reached about 84% accuracy, and one model showed relatively strong discrimination. However, the team found that the models tended to pick up artefacts specific to the experimental setup rather than robust features of finger fluting that would generalize to other contexts. This highlights an important lesson: data quality and experimental design are crucial for dependable AI-assisted archaeology.
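The article does not name the exact metrics, but the gap between "acceptable accuracy" and weak "discrimination and balance" can be illustrated with three common measures: plain accuracy, balanced accuracy (the mean of per-class recall), and ROC AUC (the probability that a randomly chosen positive example scores above a randomly chosen negative one). The Python sketch below is illustrative only; the labels and scores are toy values, not data from the study.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def balanced_accuracy(y_true, y_pred):
    """Mean recall across classes, so a minority class counts as much as a majority one."""
    recalls = []
    for label in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == label]
        recalls.append(sum(y_pred[i] == label for i in idx) / len(idx))
    return sum(recalls) / len(recalls)

def roc_auc(y_true, scores, positive):
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy case: a model that labels every fluting "male" on a male-heavy test set.
y_true = ["female"] * 2 + ["male"] * 8
y_pred = ["male"] * 10
print(accuracy(y_true, y_pred))           # 0.8
print(balanced_accuracy(y_true, y_pred))  # 0.5

# Toy scores (model's probability of "male") for four flutings.
print(roc_auc(["female", "female", "male", "male"], [0.1, 0.6, 0.4, 0.9], "male"))  # 0.75
```

The toy case shows how a classifier can post respectable accuracy while failing one class entirely, an imbalance that accuracy alone hides.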

From proof of concept to a replicable pipeline

Beyond these results, the Griffith team built a complete computational pipeline that links realistic tactile representations and VR capture with an open machine-learning workflow. They released the code and materials so that others can replicate, critique, and improve the work. Dr. Robert Haubt, a co-author and Information Scientist at ARCHE (Griffith's Australian Research Centre for Human Evolution), emphasized that sharing these resources helps turn a proof of concept into a reliable tool for future research. This openness invites researchers in archaeology, forensics, psychology, and human-computer interaction to adapt the methods to other kinds of sensory data and cultural analysis.

Implications and future directions

The study shows how digital archaeology can reframe questions about ancient cognition and social practice. If AI models can be refined to generalize beyond the original dataset, researchers may be better able to infer who created cave art, how communities organized access to sacred sites, and how early humans communicated through marks on rock surfaces. The work also points to broader interdisciplinary applications, including the analysis of petroglyphs and other rock art, the study of tactile learning in virtual environments, and the development of responsible AI tools that respect the ethical dimensions of cultural heritage research.

Conclusion: a collaborative path forward for archaeology and AI

Griffith’s digital archaeology framework illustrates the potential of combining tactile experimentation, VR simulation, and machine learning to investigate long-standing questions about ancient artists. While challenges remain—particularly in ensuring generalizable findings—the project provides a replicable, transparent workflow. As researchers continue to refine the models and expand the dataset, this approach could unlock new insights into the cognitive and cultural practices of early humans, deepening our understanding of humanity’s oldest forms of expression.